Talk:Normal distribution/Archive 4

| This is an archive of past discussions. Do not edit the contents of this page. If you wish to start a new discussion or revive an old one, please do so on the current talk page. |

| Archive 1 | Archive 2 | Archive 3 | Archive 4 |

Kurtosis Clarity

Is there a way to make clear that the kurtosis is 3 but the excess kurtosis (listed in table) is 0? Some readers may find this confusing, as it isn't explicitly labeled.

- Well, it looks clunky, but I changed it. PAR 01:48, 15 November 2006 (UTC)

huh?-summer

what? PAR 00:42, 14 December 2006 (UTC)

I am not a matemathician or a statistician but in fact I came to this discussion page exactly to understand this. Kurtosis is indicated as 3 in many other sources, including http://www.wolframalpha.com/input/?i=normal+distribution , and the 0 value in this page is confusing for me --Mantees de Tara (talk) 20:37, 25 December 2009 (UTC)

- Normal distribution has a kurtosis of 3, and an excess kurtosis of 0. The bar on the right is with "kurtosis: 0" is imprecise and potentially confusing imho. Mgunn (talk) 01:10, 13 May 2010 (UTC)

- Many sources (including the Wikipedia) define the kurtosis as the ratio of fourth cumulant to the square of the second cumulant. This is the same as fourth central moment divided by the square of variance and minus three. Those sources do not use term “excess kurtosis” at all. The confusion would probably disappear if you follow the link “kurtosis” in the infobox to read what that term actually means. // stpasha » 02:56, 13 May 2010 (UTC)

A totally useless article for the majority of people

I consider myself a pretty smart guy. I have a career in IT management, a degree, and 3 technical certifications. Granted, I am certainly not brilliant, nor am I an expert in statistics. However, I was interested in learning about the normal curve. I have a only a fair understanding of standard deviation (compared with the average person who has no idea what SD is) but wanted to really "get it" and wanted to know why the normal curve is so fundamental. Basically, I wanted to learn. So I googled "normal curve". As always, Wiki comes up first. But sadly, as (not always, but usually), the article is hardly co-herent. This article to me was written by the PhD for the PhD. it is not condusive to learning...it is condusive to impressing. It reminds me of a graduate student trying to impress a professor "look Dr. Stat, look at my super complex work". This article has defeated the purpose of wiki to me, which is to educate people. Now I will go back to Google and search for another article on the normal curve that was written for the average person who wants to learn, rather than the stat grad. Wiki is chronic for this. Either articles are meant as a politically biased rant (so much bias here), or written for a "niche" community (like this article). But so few of them are actually written to introduce, explain, and heighten learning. I read 2 paragraphs of this article, and that was more than enough. You might think I'm just too stupid to understand, and thats fine. But when I make contributions to articles that are about internet protocols and networking, I make sure that the layperson is kept in mind. This was not done here. What is so hard...seriously...about just introducing a topic and providing a nice explanation for people who do not have statistical degrees?—The preceding unsigned comment was added by 24.18.108.5 (talk) 19:57, 1 May 2007 (UTC).

- I completely agree with you! The normal distribution is a simple concept. The current editors have completely destroyed the article by trying to present it as complex as possible! If you want to understand the normal distribution forget the wikipedia article and read my next three sentence. The normal distribution is the outcome distribution of a random process. For example the number of heads that you get when you toss a random coin many times. 1) toss a random coin 10 times 2) write down the number of heads 3) repeat the previous two steps 100 times 4) plot the number of heads for the 100 trials. The End !!--92.41.17.172 (talk) 13:29, 28 August 2008 (UTC)

- I think it was written to be understood by people who do not already know what the normal distribution is, and it succeeds in being comprehensible to mathematicians who don't know what the normal distribution is, and also to anyone who's had undergraduate mathematics and does not know what the normal distribution is.

- Granted, some material at the beginning could be made comprehensible to a broader audience, but why do those who write complaints like this always go so very far beyond what they can reasonably complain about, saying that it can be understood only by PhD's or only by people who already know the material?

- And why do they always make these abusive suggestions about the motives of the aauthors of the article, saying it was intended to IMPRESS people, when an intention to impress people would (as in the present case) clearly have been written so differently?

- I am happy to listen to suggestions that articles should be made comprehensible to a broader audience, if those suggestions are written politely (instead) and stick to that topic instead of these condescending abusive paranoid rants about the motives of the authors. Michael Hardy 01:00, 2 May 2007 (UTC)

- Another reply.

- I agree with you that many mathematics articles do not do a good enough job of keeping things simple. Sometimes I even think that people go out of their way to make things complicated. So, I empathize with you.

- My advice to you is that after you do your research, ir would really be awesome if you came here and shared with us some paragraphs that really made you "get it". The best person to improve an article that "is written by PhDs" is you! One thing to keep in mind though is that an encyclopaedia has to function as a reference first and foremost. It's not really a tutorial, which is what you're looking for. Maybe in a few years the wikibooks on statistics will be better developed. As a reference, I think this page works well. (For example, suppose that you want to add two normal distributions, then the formula is right there for you.)

- Perhaps if you're struggling with the introduction, it occurred to me that you might not know what a probability distribution is in the first place. You might want to go to probability theory or probability distribution to get the basics first. One of the nice things about wikipedia is that information is separated into pages, but it means that you have to click around to familiarize with the background as it's not included in the main articles. MisterSheik 01:15, 2 May 2007 (UTC)

MisterSheik, do you have ANY evidence for your suspicion that anyone has ever gone out of their way to make things complicated? Can you point to ONE instance?

I've seen complaints like this on talk pages before. Often they say something to the general effect that:

- The article ought to be written in such a way as to be comprehensible to high-school students and is written in such a way that only those who've had advance undergraduate courses can understand it.

Often they are right to say that. And in most cases I'd sympathize if they stopped there. But all too often they don't stop there and they go on to attribute evil motives to the authors of the article. They say:

- The article is written to be understood ONLY by those who ALREADY know the materials;

- The authors are just trying to IMPRESS people with what they know rather than to communicate.

Should I continue to sympathize when they say things like that? Can't they suggest improvements in the article, or even say there are vast amounts of material missing from the article that should be there in order to broaden the potential audience, without ALSO saying the reason those improvements haven't been made already is that those who have contributed to the article must have evil motives? Michael Hardy 01:51, 2 May 2007 (UTC)

Hi Michael. I think that the user's complaint was definitely worded rudely, and so I understand your indignation. It's not like he's paying for some service, but he's looking for information and then complaining that it isn't tailored for him. So, rudeness aside.

I'm going to go through some pages, and you can tell me what you think. (Apologies in advance to the contributors of this work.) Look at this version of mixture model: [1]. Two meanings? They're the same meaning.

But, what about this? [2] versus now pointwise mutual information.

There's a lot of this wordiness going on as well: [3], [4]], [5], [6], [7], [8] and [9].

And equations for their own sake: [10] and [11] (looks like useful information at first, but it's just an expansion of conditional entropy.)

Maybe all of the examples aren't perfect, but some are indefensible.

I like to see things explained succinctly, but making the material instructional instead of a making it function as a good reference is a bad idea, I think. And that's one of the things I told the person: find the wikibook.

But I still haven't answered your point about

- The article is written to be understood ONLY by those who ALREADY know the materials;

- The authors are just trying to IMPRESS people with what they know rather than to communicate.

Maybe it's not happening intentionally, or even consciously, but how do people produce some of the examples above without first snapping into some kind of mode where they are trying to speak "like a professor does"?

MisterSheik 03:33, 2 May 2007 (UTC)

- I'm afraid I don't understand your point. You've shown examples of articles that are either incomplete or in some cases inefficiently expressed, but how is any of this even the least bit relevant to the questions you were addressing? I said I'd seen it claimed that some articles are written to be understood only by those who already know the material; you have not cited anything that looks like an example. I said I'd seen it claimed that some articles were written as if the author was trying to impress someone. Your examples don't look like that. You say "maybe it's not happening intentionally", but you seem to act as if the articles you cite are places where it's happening. I don't see it. What in the world do you mean by speaking like a professor, unless that means speaking in a way intended to convey information? Are you suggesting that professors typically speak in a manner intended simply to impress people? Or that professors speak in a manner that communicates only to those who already know the material? Maybe you can mention some such cases, but you're actually acting as if that's typical.

- Could you please try to answer the questions I asked? Do you know any cases of Wikipedia articles where the author deliberately tried to make things complicated? You said you did. Can you cite ONE? Michael Hardy 21:57, 3 May 2007 (UTC)

-

- PS: In mixture models: No, they're not the same thing. Both involve "mixtures", i.e. weighted averages, but they're not the same thing. Michael Hardy 21:57, 3 May 2007 (UTC)

Hi Michael, it's fine to say that these ideas are inefficiently expressed, but why are they inefficiently expressed? I think it's because writers are subconsciously aiming to make things difficult in order to achieve a certain tone: the one that they associate with "a professor". In other words, I think that people are imagining a target tone rather than directly trying to convey information succinctly. ps they are both examples of a "mixture model", which has one definition ;) MisterSheik 23:00, 3 May 2007 (UTC)

- Well I think it's because they just haven't worked on the article enough. If you're going to make claims about their subconscious motivations, you have a heavy burden of proof, and you haven't carried it, so I'm not convinced, to say the least. Are you going to make assertions about what you believe, or are you going to try to convince me? And is that relevant to this article? Is there anything in this article that looks as if someone's trying to make things difficult for the reader, consciously or otherwise? It looks as if it's not written for an audience of intelligent high-school students, and possibly that could be changed with more work, but it is written for mathematicians and others who don't know what the normal distribution is. And you speak of what they associate with "a professor". You know what you associate with a professor; how would you know what others associate with a professor? The simple fact is, it's harder to write for high-school students than for professionals. Don't you know that? It takes more work, and the additional work has not been done, yet. Are you saying people did not do that additional work because they're trying (subconsciously, maybe?) to make things difficult for the reader? What makes you think that? Be specific. When people try to feign sounding like a professor, they typically misuse words in ways that look stupid to those who actually know the material. "An angry Martin Luther nailed 95 theocrats to a church door." That sort of thing. Using words in the wrong way and unintentionally sounding childish. That's not happening in this article. It's also not happening in the ones you cited. Some parts of those are clumsily written; some parts are hard to understand because there's not enough explanation there. This article is generally well-written, and that would be impossible if someone were trying to fake sounding like a professor.

- You're shooting your mouth off a lot, telling us about people's subconscious motivations, as if we're supposed to think you know about those, and it's really not proper to do that unless you're going to at least attempt to give us some reason to think you're right about this. Michael Hardy 23:45, 3 May 2007 (UTC)

Whoa. I'm not "shooting my mouth off". I made it really clear that it was my impression that sometimes I think that authors make things difficult to understand. How is that "improper". I'm just sharing my opinions about the motivations of authors unknown. No one is attacking you. I don't have a "heavy burden of proof", because they're just my opinions and you're entitled to disagree. I showed you some examples of what convinced me and asked you what you thought. Ask yourself if you're getting a bit too worked up over nothing here?

(On the other hand, when you use rhetoric like "Don't you know that?", I can't see that you're kidding, and so it sounds like you are shooting your mouth off.)

Regarding this article, I think its fine. I guess the "overview" section could be renamed "importance" since it's not an overview at all. And, the material could be reorganized a little bit since occurrence and importance have similar information, but maybe not.

You make a really good point about people feigning sounding like a professor, and we have both seen that kind of thing. That's not what I meant though. I was trying to get at professionals or academics who know the material going out of their way to word things awkwardly. Let's take one example: "A typical examplar is the following:" Are we supposed to believe that someone actually uses that kind of language day-to-day? Someone is trying to impress the reader with his vocabulary, or achieve an air of formality, or what? Whatever it is, it's bad writing that, due to its unnaturalness, seems intentional (to me). I'm not saying someone is intentionally trying to trip up the reader. I'm saying that someone is trying to achieve something other than inform the reader in the most succinct way. I was trying to illustrate with my examples "undue care" for the presentation of information. MisterSheik 00:12, 4 May 2007 (UTC)

- I didn't think you were attacking me, but I did think you were asking me to believe something far-fetched without giving reasons. If you're talking about wordiness, I think it often takes longer to express things more simply. Michael Hardy 00:21, 4 May 2007 (UTC)

- yeh i agree this is excessively far too technichal for those who have no or little understanding. I've worked in Quality assurance for 12 years and used normal distributions alot and don't see much mention of six sigma, cp, cpk, ppm, USL, UWL, LSL, LWL, inter quartile ranges, gauge R&R etc etc this article does appear geared towards mathematic graduates and not very useful to many using it in the "real world". I did learn quite a bit of the maths while achieving my green belt in six sigma, but once putting it in practice don't really need to know alot of it and alot of this article has gone straight over my head lol. In the real world theres plenty of software that will automatically calculate the data for you and produce the graphs providing you understand the correct inputs and variables ie Minitab. More and more in the manufacturing industry these stats are not only used by quality engineers like myself but general operators are expected to understand what a curve should be looking like, std dev/mean targets, good/bad cpk levels etc i'm talking people with little or no qualifications. this article will be of no use whatsoever to them imho.

Basically a normal distribution is a curve which shows the distribution of data for something measurable. You will have a target mean (average) to aim for to ensure your distribution is maintained within the tolerance levels (LSL/USL) and have warning levels (LWL/UWL) which indicate when the process is going out of control and action needs to be taken to bring it back in control, limiting any rejects outside the LSL/USL (OK that bit is control charts rather than normal distribution but still related). Cp is a measure of the process variation about the mean (the higher the better towards 3), with cpk a measure of the process variation about a target mean. A Cp of 2 would be ok, but if the mean of the data is 20 when the target mean is 40 then thats not so good as it shows you have a controllable process but its all out of spec likely due to some incorrect setting. PPM part per million indicating how parts you are producing out of spec per million parts produced. —Preceding unsigned comment added by 77.102.17.0 (talk) 01:00, 5 December 2009 (UTC)

Very important topic

{{Technical}}

Lead to article is excellent, and the first few sections are readable, but topic is essential to a basic understanding of many fields of study and therefore a special effort should be made to improve the accessibility of the remaining sections. 69.140.159.215 (talk) 13:00, 12 January 2008 (UTC)

- Do the remaining sections need to be more accessible? I think they are largely technical or esoteric in nature so most people dont actually need to be able to understand them. If it is required then I would argue that further education in maths is required rather than making the sections more accessible.

- I think accessibility needs to be compared to clarity. If they are clear (albeit to a university educated individual) then it is sufficient.schroding79 (talk) 00:30, 25 June 2008 (UTC)

An Easy Way to Help Make Article More Comprehensible

Correct me if i am wrong but an easy way to make it easier for people highchool through Ph.D level would be to leave as is but work though easy examples in the beginning. —Preceding unsigned comment added by 69.145.154.29 (talk) 23:29, 10 May 2008 (UTC)

I was never comfortable calling this distribution a "normal" distribution, too much baggage comes with the word "normal". However, what I think what might help more people get a handle on this probability distribution, is to try and describe how the word "normal" got associated with it. Fortran (talk) 01:39, 6 April 2009 (UTC)

Along time since i learnt the history but think "normal" refers to the actual shape, as the bell shape for a normal collection of data will be a nice even bell shape curve, aka normal. Whereas if its skewed in some way due to some unknown variable then you are not achieving the target of a normal distribution curve? —Preceding unsigned comment added by 77.102.17.0 (talk) 01:22, 5 December 2009 (UTC)

The Normal distribution is called the "Normal" distribution because several hundred years ago many people who were studying distributions noticed that in a large number of cases, the distributions looked similar. Thus if the distribution looked like most others, it was called "Normal." What Fortran is saying is that we now know the reason why many distributions all looked "Normal" (the Central Limit Theorem), and discussing how sampling and the CLT can lead to having a Normal distribution can be enlightening. —Preceding unsigned comment added by 141.211.66.134 (talk) 16:33, 10 March 2010 (UTC)

Progress towards GA quality

Given the suggestion on the edit descriptions list that this article might be pushed towards GA status, it would be good if readers/editors would set down some areas for improvement. Any more suggestions as to what is needed? Melcombe (talk) 09:10, 22 September 2009 (UTC)

(above comment split to allow addition of general discussion of changes needed for article) Melcombe (talk) 08:58, 25 September 2009 (UTC)

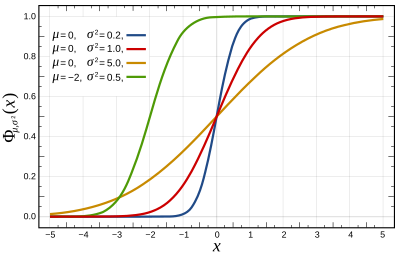

Pictures

Graphs should be improved too — the curves should be more thicker so that they are better visible; and also the labels violate MOS, as certain numbers are typeset in italics.

Done. … stpasha » 21:23, 5 October 2009 (UTC)

Done. … stpasha » 21:23, 5 October 2009 (UTC)

Short sections

No section should consist of just a single test — they should be either expanded, or merged with some other sections.

Done I merged all short sections with similar topics. … stpasha » 21:23, 5 October 2009 (UTC)

Done I merged all short sections with similar topics. … stpasha » 21:23, 5 October 2009 (UTC)

Too technical

The "too technical" tag shown on this talk page, but which might be missed; Melcombe 09:10, 22 September 2009 (UTC)

- The {{technical}} tag was placed by User:Velho on 10th of August, 2008. Back then the article indeed looked a little bit more technical. So it may be appropriate to remove the tag now (or maybe not) … stpasha » 16:35, 22 September 2009 (UTC)

Done. The article now has formula-free lead section, and easy-going introduction section; so I have removed the {{technical}} tag. The rest of the article is of course quite mathematical, but the math is unavoidable. … stpasha » 19:16, 3 October 2009 (UTC)

Done. The article now has formula-free lead section, and easy-going introduction section; so I have removed the {{technical}} tag. The rest of the article is of course quite mathematical, but the math is unavoidable. … stpasha » 19:16, 3 October 2009 (UTC)

Heights of US adult males

I removed the following paragraph from the lead, since the lead is already too long, and it'll be expanded even more to include references to multivariate normal and complex normal distributions, and Gaussian stochastic processes.

The normal distribution can be used to describe, at least approximately, any variable that tends to cluster around the mean. For example, the heights of adult males in the United States are roughly normally distributed, with a mean of about 70 inches. Most men have a height close to the mean, though a small number of outliers have a height significantly above or below the mean. A histogram of male heights will appear similar to a bell curve, with the correspondence becoming closer if more data are used.

The example can still be used somewhere later in the article, although we don’t have a conceivable “introduction” section before we go into hard math. … stpasha » 21:35, 23 September 2009 (UTC)

- It should obviously be put back. there is no need to include references to multivariate normal and complex normal distributions, and Gaussian stochastic processes in the lead. Melcombe (talk) 14:41, 24 September 2009 (UTC)

- Not obvious to me though. The WP:LEAD states that the lead should generally be no longer than 3−4 paragraphs. Also the article is titled “Normal distribution”, not “Univariate normal distribution”. Since it focuses mainly on the scalar case, it must provide clear directions as to where to find the information on multivariate Gaussian distribution, since it is not obvious. In general use, the term “normal distribution” is not restricted to univariate case, for example we say that a vector is distributed normally with 0 mean and variance matrix σ²In, we don’t say it’s distributed “multivariate normally”. Some authors even generalize normal distribution to ∞-dimensional Hilbert spaces, this definition could be reflected at least somewhere, maybe in the “multivariate” article. … stpasha » 18:09, 24 September 2009 (UTC)

- Removing the only generally understandable material from the lead on the ground that there is no room for it is unhelpful to the purpose of WP. The lead is meant to be generally understandable. The maths clearly needed to be split off, and I have done so. Generalisations and connected topics can be dealt with in 3 ways: in the main article text, in the "see also" section or in specific template that would appear at the very head of the article. Melcombe (talk) 09:04, 25 September 2009 (UTC)

Done. alright so the paragraph is restored, and disambiguation hatnote established. As there are no further objections, I'm closing this subdiscussion. … stpasha » 21:07, 5 October 2009 (UTC)

Done. alright so the paragraph is restored, and disambiguation hatnote established. As there are no further objections, I'm closing this subdiscussion. … stpasha » 21:07, 5 October 2009 (UTC)

- Removing the only generally understandable material from the lead on the ground that there is no room for it is unhelpful to the purpose of WP. The lead is meant to be generally understandable. The maths clearly needed to be split off, and I have done so. Generalisations and connected topics can be dealt with in 3 ways: in the main article text, in the "see also" section or in specific template that would appear at the very head of the article. Melcombe (talk) 09:04, 25 September 2009 (UTC)

- Not obvious to me though. The WP:LEAD states that the lead should generally be no longer than 3−4 paragraphs. Also the article is titled “Normal distribution”, not “Univariate normal distribution”. Since it focuses mainly on the scalar case, it must provide clear directions as to where to find the information on multivariate Gaussian distribution, since it is not obvious. In general use, the term “normal distribution” is not restricted to univariate case, for example we say that a vector is distributed normally with 0 mean and variance matrix σ²In, we don’t say it’s distributed “multivariate normally”. Some authors even generalize normal distribution to ∞-dimensional Hilbert spaces, this definition could be reflected at least somewhere, maybe in the “multivariate” article. … stpasha » 18:09, 24 September 2009 (UTC)

- It should obviously be put back. there is no need to include references to multivariate normal and complex normal distributions, and Gaussian stochastic processes in the lead. Melcombe (talk) 14:41, 24 September 2009 (UTC)

Exponential function

I think we should use only one notation for the exponential function. As you know, there is exp(x) and e^x. I skimmed through the article and found that exp(x) is more common. Tomeasy T C 06:51, 25 September 2009 (UTC)

- In mathematics ex is a standard notation, whereas exp(x) is an accepted substitute in cases when the expression x is itself complicated. Exponential functions with other bases, such as 2x or 10x do not have analogous “inline” notation. This is why primary notation for exponent function should remain ex. However there is no reason why we cannot intermix different notations in the same article, it is not a violation of MoS, and mathematicians do that all the time. … stpasha » 22:49, 25 September 2009 (UTC)

Done. I have replaced all the occurences of exp(…) with the shorter e… notation. … stpasha » 21:03, 5 October 2009 (UTC)

Done. I have replaced all the occurences of exp(…) with the shorter e… notation. … stpasha » 21:03, 5 October 2009 (UTC)

repeated pictures

The two images shown in the infobox are repeated further down. I think we should remove them. Any thoughts? Tomeasy T C 22:33, 25 September 2009 (UTC)

- They should probably appear at least once. The sections on the pdf and the cdf seem like appropriate places for them. Michael Hardy (talk) 22:38, 25 September 2009 (UTC)

Done. … stpasha » 20:06, 27 September 2009 (UTC)

Done. … stpasha » 20:06, 27 September 2009 (UTC)

Probability density function

This subsection is largely a repetition of the section Definition. I would like to include the additional content of the subsection into the section, and remove the subsection. What do you think? Tomeasy T C 22:36, 25 September 2009 (UTC)

- Both sections should remain. We want to introduce the topic slowly before descending into hard math. Section “definition” is intended to describe the normal distribution in easy terms, whereas “probability density function” can be more complicated. It is also possible that we will include into “definition” section something about the cdf, although that is not very easy to do while maintaining the goal of keeping this section as simple as possible. … stpasha » 20:05, 27 September 2009 (UTC)

Done. both sections remain, although now they have distinctly different content. … stpasha » 19:51, 3 October 2009 (UTC)

Done. both sections remain, although now they have distinctly different content. … stpasha » 19:51, 3 October 2009 (UTC)

Purpose of the constant (1/sqr(2pi))

The article states the formula for a normal curve is as follows: But what is the origin/purpose of the constant: ? Might be useful to include that info. --Steerpike (talk) 20:32, 26 September 2009 (UTC)

Done. … stpasha » 19:59, 27 September 2009 (UTC)

Done. … stpasha » 19:59, 27 September 2009 (UTC)

Should History section come before Definition?

I think it should. For example because it has less formulas in it :) … stpasha » 21:08, 27 September 2009 (UTC)

Done. … stpasha » 16:45, 2 October 2009 (UTC)

Done. … stpasha » 16:45, 2 October 2009 (UTC)

Complex normal

- There has been a suggestion during previous peer-review that info about complex Gaussian r.v. was included here. Although I'm not sure what a complex Gaussian is… if (X,Y) are jointly normal then we can say that Z=X+iY is complex Gaussian, but then the variance of such random variable is a 3-component quantity, so I'm not sure how it is supposed to be described in the language of complex variables? Well if anybody knows a good book on this topic, let me know.

- ... stpasha » talk » 16:35, 22 September 2009 (UTC)

- As in the article, the usual definition of complex normal has X,Y independent and equal variance. The book by Brillinger BR (1975) Time Series: Data Analysis ang Theory, Holt Rinehart & Winston ISBN 0-03-076975-2 .. has a very brief section on the complex multivariate normal distribution. The reason for dealing with this special case relates to Fourier analyses of time series. The variance of a complex rv is defined via a product of conjugates and so is real. You might see also [12] for some that is online and in sophisticated maths. An online search leads to at least one paper that deals with the unrestricted case but it is not publically accessible. Melcombe (talk) 17:13, 22 September 2009 (UTC)

- I've checked this Andersen et al. book, and they indeed define complex normal distribution as symmetric one. However I feel that such definition is not entirely adequate, since it does not give rise to the Central limit theorem for complex r.v's. In particular, if {zt} are zero-mean complex random variables, then we would like to say that the sum T−1/2∑zt converges in distribution to a complex normal distribution; in this case the limiting distribution need not be symmetric if E[Re[z]Im[z]]≠0.

- There is a paper by van der Bos (1995) “The multivariate complex normal distribution — a generalization”, and then also another article by Picinbono (1996) which consider a generic form of complex normal distribution. However this all probably merits its own separate article Complex normal distribution, just as we have separate Multivariate normal distribution already. ... stpasha » 16:57, 23 September 2009 (UTC)

- It doesn't matter what you think is adequate. The fact is that all the literature takes "complex normal distribution" (when not given general some form of "generalised" tag) to mean the equal-variance, uncorrelated normal case and articles here are meant to reflect that. In addition, moving away from the standard usage would conflict with the need to define the complex Wishart distribution in the standard way for that. Melcombe (talk) 14:41, 24 September 2009 (UTC)

- The theory of complex Gaussian distribution was developed by Goodman (1963), and he defines “A complex Gaussian random variable is a complex random variable whose real and imaginary parts are bivariate Gaussian distributed.” Later on he admits that “In the present paper the phrase ‘multivariate complex Gaussian distribution’ is restricted to that special case”, and proceeds to describe the circular Gaussian because it is easier and can be expressed in terms of Wishart distribution.

- Van den Bos (1995) however writes: “Since its introduction, the multivariate complex normal distribution employed in the literature has been a special case: the covariance matrix associated with it satisfies the number of restrictions … The reason given in [Wooding 1956] for these restrictions is closely connected with the particular application studied. … These developments have probably convinced later authors that this specialized complex normal distribution is the most general one.”

- The article on complex normal distribution must reflect both the restricted circular distribution, and the unrestricted generic case. … stpasha » 18:09, 24 September 2009 (UTC)

- This is quite heated debate over a non-existent (yet) article :) Now I'm obviously not an expert on the subject, having learned about the topic only a couple of days ago, but it seems to me that since we are writing an encyclopedia then we have to present both points of view and both definitions. The alternative would be to make two distinct articles “circular complex gaussian distribution” and “general complex gaussian distribution”, which eventually someone will merge anyways. … stpasha » 22:57, 25 September 2009 (UTC)

Done. The subsection has been moved out to the Complex normal distribution article. … stpasha » 20:35, 13 October 2009 (UTC)

Done. The subsection has been moved out to the Complex normal distribution article. … stpasha » 20:35, 13 October 2009 (UTC)

Notation suggestion

I propose to uniformly replace symbol φ (the pdf of the standard normal distribution) with ϕ (in LaTeX: \phi, in HTML: ϕ). The main reason for this change is to differentiate somehow standard normal distributions from characteristic functions, which are also denoted with φ. … stpasha » 21:35, 7 October 2009 (UTC)

Done. Since there seems to be no objections, I'm going to perform this change. … stpasha » 20:27, 11 October 2009 (UTC)

Done. Since there seems to be no objections, I'm going to perform this change. … stpasha » 20:27, 11 October 2009 (UTC)

Estimation

This section in the article seems to be too biased towards the unbiasedness of estimation. At the same time it misses some important info about the t-statistic and construction of confidence intervals. Also the “maximum likelihood estimation” section is bloated — the detailed derivation is already present in the maximum likelihood article and probably doesn’t need to be repeated here. … stpasha » 02:07, 29 November 2009 (UTC)

Done. … stpasha » 09:11, 3 December 2009 (UTC)

Done. … stpasha » 09:11, 3 December 2009 (UTC)

History section

The history section could be expanded. For once, it doesn't mention the important contributions of Maxwell, when he discovered that gas particles, being constantly subjected to bombardment from the other gas particles, will have their velocities distributed as 3d multivariate Gaussian rv's. I believe this discovery to be important because it demonstrated that normal distribution occurs not only as a mathematical approximation in games of chance or as a convenient tool in least squares analysis, but also exists in nature. … stpasha » 21:52, 5 October 2009 (UTC)

Done. // stpasha » 04:49, 5 March 2010 (UTC)

Done. // stpasha » 04:49, 5 March 2010 (UTC)

sum of ... factors ?

any variable that is the sum of a large number of independent factors

A sum of factors?? Am I getting this very wrong, or is this sentence indeed ill phrased. I would say a sum of summands or a product of factors, but still I would not really understand what this sentence tries to say. Please someone who understands the content, judge whether the wording sum of ... factors is correct. Tomeasy T C 22:25, 25 September 2009 (UTC)

- "Factor" is the wrong word; I've changed it to "terms". Michael Hardy (talk) 22:36, 25 September 2009 (UTC)

- Thanks that solves the first issue I had with this sentence. Now it says:

- any variable that is the sum of a large number of independent terms is likely to be normally distributed.

- I find the combination of the words any and likely highly illogical. If it is just likely, then how can it be for any variable? Tomeasy T C 08:33, 26 September 2009 (UTC)

OK, I see the logical flaw has been erased by somebody. Now that semantically the statement is correct, let's focus on the content. Any variable that is the sum of a large number of independent terms is distributed approximately normally. Really, is that so? I would guess most variables are not distributed at all, because they depend on independent but deterministic terms. I see that variable is linked to random variable. The qualifier random is key here to ensure the statement is not ridiculous. Therefore, the text must show this. Tomeasy T C 07:06, 2 October 2009 (UTC)

- Yeah, there is also the fact that it is a misinterpretation of the CLT which regards the mean, not the entire distribution. O18 (talk) 16:03, 2 October 2009 (UTC)

- The CLT pertains either to the mean or to the sum. Trivially if either of those is normally distributed then so is the other. How do you find something about "the entire distribution" (whatever that means) in the statement that the sum is normally distributed? Michael Hardy (talk) 21:02, 8 October 2009 (UTC)

Yes, sum of factors. The word “factor” here should be understood as “An element or cause that contributes to a result (from Latin facere: one who acts)” (Collins). Of course it is so much unfortunate that this can be confused with the mathematical “factor” which is one of the terms in a product...

Another problem is the following: a typical layperson does not see the world in terms of random variables. For an everyman, the phenomenon is recognized as “random” if it recurs often and has pronouncedly different results each time: such as weather, or lottery, or coin tosses, etc. Other things such as heights or IQs aren’t really seen as “random” unless you force them to stop and think about it. For this reason, writing “any random variable which is the sum of independent terms” does not convey the important message: that this is not an abstract mathematical theorem but rather an approximation for great many random things encountered in the real life.

We can try the following: By the central limit theorem, any quantity which results from an influence of a large number (at least 10–15) of independent factors, will have approximately normal distribution. … stpasha » 21:02, 5 October 2009 (UTC)

- That makes sense to me. I would slightly reformulate: By the central limit theorem, any quantity that is influenced by a large number (at least 10–15) of independent factors, will have approximately normal distribution.. Note that the last half sentence is still grammatically wrong. Unfortunately, I cannot resolve this issue. Tomeasy T C 20:10, 8 October 2009 (UTC)

Standard normal: merger?

Currently there is a separate article standard normal random variable (stub), whereas standard normal distribution redirects to the current article. I suggest that the first article be merged with the current, probably within the “Standardizing normal random variables” subsection. … stpasha » 10:12, 9 October 2009 (UTC)

Done. // stpasha » 23:57, 8 March 2010 (UTC)

Done. // stpasha » 23:57, 8 March 2010 (UTC)

Tests of normality

Some action is needed for the redlinks shown under "tests of normality" ... either creating new articles, expanding existing ones that can be linked to, or providing direct citations; Melcombe 09:10, 22 September 2009 (UTC)

Done. // stpasha » 05:54, 19 March 2010 (UTC)

Done. // stpasha » 05:54, 19 March 2010 (UTC)

See also

There seems a need to reduce the number of articles under "see also", preferably by saying something about them in the main text (if not already there}; Melcombe 09:10, 22 September 2009 (UTC)

References

More inline citations, restructuring of notes/references to more convenient form. I guess most detailed results will be findable in Johnson&Kotz so perhaps we could aim to provide page or section numbered pointers to this source. Melcombe 09:10, 22 September 2009 (UTC)

- Johnson&Kotz turned out to be pretty useless, mainly talking about the numerical approximations and which laws are derived from normal. Also their formula for entropy is wrong. We need to find some other reference, preferably the one which actually derives the results. // stpasha » 19:42, 20 May 2010 (UTC)

Kurtosis again

The use of the field "kurtosis" in the table seems not to be consistent across distributions. In some it seems to be the "normal" kurtosis and in some the excess kurtosis (-3). This is really problematic. I think it should either be named "excess kurtosis" in the table, or there should be two fields, one for each. Personally, I think one field should enough, and probably it should be the excess kurtosis, since this is usually more useful. However, it should be made clear, at least to people changing the page, that this is the excess kurtosis and not the other. If there is just one field, which is named "kurtosis" there will always be some who think, its the normal one and change it (see e.g. for the lognormal distribution, change from 21:13, 1 December 2009). Maybe it would be enough to change the template, so that it says "excess_kurtosis=..." instead of "kurtosis=...". Any other thoughts on this? Ezander (talk) 15:45, 22 February 2010 (UTC)

- Excess kurtosis definitely seems more useful. It has a nice additivity property: The excess kurtosis of i.i.d. random variables with equal variances is just the sum of their separate excess kurtoses. Michael Hardy (talk) 18:50, 22 February 2010 (UTC)

Financial variables

There is a discussion on the WikiProject Statistics talk page about the financial variables section of this article. Regardless of the merits of the recent additions, and whether they are OR, the issues raised are more about difficulties with estimating the marginal distribution of a dependent, non-stationary sequence, and less about normality per se. This content is too detailed and not sufficiently relevant to be included here. Skbkekas (talk) 16:26, 15 March 2010 (UTC)

- The content was removed from the article several days ago. // stpasha » 20:57, 21 March 2010 (UTC)

by W.J.Youden

| “ |

THE NORMAL LAW OF ERROR STANDS OUT IN THE EXPERIENCE OF MANKIND AS ONE OF THE BROADEST GENERALIZATIONS OF NATURAL PHILOSOPHY ♦ IT SERVES AS THE GUIDING INSTRUMENT IN RESEARCHES IN THE PHYSICAL AND SOCIAL SCIENCES AND IN MEDICINE AGRICULTURE AND ENGINEERING ♦ IT IS AN INDISPENSABLE TOOL FOR THE ANALYSIS AND THE INTERPRETATION OF THE BASIC DATA OBTAINED BY OBSERVATION AND EXPERIMENT ♦ |

” |

// stpasha » 23:58, 21 March 2010 (UTC)

Scores

This article says:

-

- Many scores are derived from the normal distribution, including percentile ranks ("percentiles" or "quantiles"), normal curve equivalents, stanines, z-scores, and T-scores. Additionally, a number of behavioral statistical procedures are based on the assumption that scores are normally distributed; for example, t-tests and ANOVAs (see below). Bell curve grading assigns relative grades based on a normal distribution of scores.

In what sense can it be said that z-scores and percentiles "are derived from the normal distribution"? Michael Hardy (talk) 16:16, 27 April 2010 (UTC)

- I believe it has something to do with the “laws” such that three sigma rule or six sigma rule, which are used by practitioners regardless of whether the underlying distribution is normal or not (most often this distribution is simply unknown). But you're right, this entire section is rather strange; maybe it should be moved to the applications... // stpasha » 01:15, 28 April 2010 (UTC)

Notation

The article presently has "Commonly the letter N is written in calligraphic font (typed as \mathcal{N} in LaTeX)." without a citation. All the sources I have use a non-script font and I have never seen it in a script font: it is certainly not common. WP:MSM says " it is good to use standard notation if you can" so why use something unnecessarily complicated, particulrly as there is no citation for this notation. Melcombe (talk) 13:49, 18 May 2010 (UTC)

- Among those books that I currently have, the ones using the script N are:

- Le Cam, L., Lo Yang, G. (2000) Asymptotics in statistics: some basic concepts, 2nd ed. New York: Springer-Verlag.

- Ibragimov I.A., Has’minskii, R.Z. (1981) Statistical estimation: asymptotic theory. New York: Springer-Verlag.

- Other books use: either Normal(μ, σ²), or n(μ, σ²), or N(μ, σ²). // stpasha » 16:55, 18 May 2010 (UTC)

generating a gaussian dataset

I would like to generate a set of numbers (x,y) with a known mean and CV; that is, I wish to generate a set of numbers that have a gaussian distribution, where I can set the mean and CV in advance. Thanks PS: maybe it doesn't go here, but a section on curve fitting software might help (please - no"r", if you know R, you already know a lot; stuff like IgorPro or Kaleidagraph etc, or excel thanks —Preceding unsigned comment added by 108.7.0.214 (talk) 17:34, 22 June 2010 (UTC)

- You might want to look at multivariate normal and then learn R ;) 018 (talk) 18:03, 22 June 2010 (UTC)

OK, let's start by assuming the covariance matrix is

so that ρ is the correlation. To be continued.... Michael Hardy (talk) 18:23, 22 June 2010 (UTC)

- ...before I go on, let me request a clarification. When you take a large random sample from a distribution with mean μ, then on average the mean of the sample will be μ, but each time you take a large random sample, the mean differs somewhat from exactly μ. Is that what you want to do or do you want the sample average to be exactly the specified value? And similarly for the variances and correlation? I can give you an algorithm for either of those. Michael Hardy (talk) 18:57, 22 June 2010 (UTC)

- By 'CV', do you mean coefficient of variation, or covariance? If you mean coefficient of variation, do you want x and y to be correlated, or not? Qwfp (talk) 19:22, 22 June 2010 (UTC)

Normal distribution entropy.

By definition the entropy of the Normal distribution is not negative value. But what if σ → 0 in the finite formula of entropy? Thanks. Aleksey. —Preceding unsigned comment added by Kharevsky (talk • contribs) 08:06, 5 July 2010 (UTC)

bell curve

this article, under Definition, and the one on Gaussian function contain conflicting information on the meaning of constants a and c for the "bell curve."

— Preceding unsigned comment added by 68.37.143.246 (talk) 01:52, 7 July 2010 (UTC)

Implementation section

Isn't this section a little much of a "how to" for Wikipedia? 018 (talk) 17:22, 9 July 2010 (UTC)

Great, comprehensive page on the normal distribution, almost perfect. However, the detailed section on 'Gaussian' random number generators (which is also extremely informative) really does not belong in this top-level entry. —Preceding unsigned comment added by 129.125.178.72 (talk) 16:04, 3 August 2010 (UTC)

Product of Gaussians

I was missing a reference to the product of two gaussians. This could also go into the page for the gaussian function (there is a short mention of it, no mentioning of the resulting properties), but is is also relevant here. —Preceding unsigned comment added by 134.102.219.52 (talk) 12:34, 7 September 2010 (UTC)

Gaussian Distribution is not necessarily normal?

In the opening sentence the article states that the normal distribution is also known as a Gaussian distribution. I would argue however that the normal distribution is a special case of the Gaussian distribution, i.e. one that has an integral of 1, hence why it is called normal. The Gaussian distribution is in my opinion any general distribution described by the Gaussian function

If there aren't any objections I will edit the article to reflect this schroding79 (talk) 00:08, 25 June 2008 (UTC)

- Huh? For something to be a probability distribution, it has to integrate to 1. As far as I am familiar, the common usage of the Gaussian distribution refers to it as a probability distribution. This also seems to be the definition used in the top few google searches I did for gaussian distribution. I concede that it possible that 'gaussian distribution' can be used in broader contexts (while the normal may not?), but I don't think this is the normal (ahem) way it is understood. So the opening sentence should remain, though a note (or footnote?) might be added later on, if you can find a good reference to back it up.--Fangz (talk) 00:37, 25 June 2008 (UTC)

Yes, the gaussian distribution is normal in shape. The standard normal distribution integrates to 1, whereas a frequency distribution which is normal or gaussian in shape does not necessarily integrate to 1. One aspect of interest to readers which is missing from the Wiki page about the Normal Distribution is the relationship between frequency distributions and probability distributions. Perhaps an introductory paragraph linking to Wiki pages about frequency distributions would be a good idea. It would help put this article into context. Lindy Louise (talk) 09:58, 29 September 2010 (UTC)

- There is no such thing as “Gaussian distribution is normal in shape”, the gaussian and the normal are just two synonymous names for the same distribution. A frequency distribution is merely a histogram of the random variable, it also always integrates to one. // stpasha » 17:38, 29 September 2010 (UTC)

I disagree and am curious to know why you think a frequency distribution "also always integrates to one". A frequency distribution does not always integrate to one. A probability distribution always integrates to one. This is why we normalise the normal distribution to get the standard normal distribution: the standard normal distribution integrates to one and therefore can be used as a probability distribution. This is basic stuff but is often omitted from the more esoteric textbooks. Lindy Louise (talk) 13:18, 30 September 2010 (UTC)

- Lindy Louise, you are right that a frequency plot sums/integrates to N (the number of units), but any and all probability distributions sums/integrates to one. This is not a special property of the standard normal. The integral of probability distribution over any range shows the probability that a random value drawn from the population will take on that value. If the integral (over all possible values) were anything other than one, the probability of drawing something when you drew something would be less or greater than one. 018 (talk) 15:13, 30 September 2010 (UTC)

- The article frequency distribution explicitly defines this in a way that does not add to 1, but rather to the sample size. Thus frequency distribution and "probability distribution" are different. However, both "normal distribution" and "Gaussian distribution" are, in the univariate context anyway, used with identical meanings, and either can said to represent either the probability distributions or the frequency distributions of observed data, where in the latter case there is naturally a scaling by the sample size in the interpretation of "represent". Melcombe (talk) 16:16, 30 September 2010 (UTC)

I never said the standard normal distribution was the only probability distribution that integrated to one -- obviously any probability distribution function integrates to one. Neither did I say that gaussian and normal distributions are different. I agree with Melcombe. Lindy Louise (talk) 17:16, 30 September 2010 (UTC)

- I'm confused by your meaning then when you write, "This is why we normalise the normal distribution to get the standard normal distribution: the standard normal distribution integrates to one and therefore can be used as a probability distribution." But maybe it doesn't matter. Did you want to update the opening paragraph/article? If so, how do you want to update it? 018 (talk) 18:51, 30 September 2010 (UTC)

Thanks O18 for your comment. I think I'm guilty of being too verbose, but I believe some readers confuse Normal Distribution with Standard Normal Distribution and I wanted to make the distinction. What I should have said is the Normal Distribution cannot be used directly as a Probability Distribution because the area under the Normal curve isn't equal to one. So we deliberately make the area under the Normal curve equal to one by doing some fancy maths: this normal distribution with an area of one is called the Standard Normal Distribution. It can then be used as a Probability Distribution simply because the area is equal to one. (In any probability system the sum of all the probabilities must equal one or, in other words, the area under a proability curve is equal to one.) Still verbose, sorry! Maybe I should have a go at updating the opening paragraph; I'll think about it. I was going to insert a link to Wiki pages about probability distributions and probability density functions but they're too difficult for non-mathematicians to understand, so I haven't. Lindy Louise (talk) 21:29, 30 September 2010 (UTC)

- Well, I just reread the frequency distribution article, and it says that the table of frequency distributions contains either frequencies or counts of occurrences. Also if you check the frequency article, it says there are absolute and there are relative frequencies. So whether or not the frequency distribution “integrates” to one or to n is your own choice. Also, Lindy, check the definition section: standard normal is a normal distribution with mean zero and variance one. If you want to make a distinction, then the topic you are most likely looking for is called the Gaussian function. Cheers! // stpasha » 05:38, 1 October 2010 (UTC)

If you integrate an absolute-frequency distribution you will not necessarily get unity for your answer. In fact I would think it a freak event if it were to happen! The only way you can be sure of obtaining unity by integration is if you use relative frequencies or probabilities. Hence the need for the Standard Normal Distribution, because we can be sure its integral is unity. The fact that the mean and variance of the Standard Normal Distribution are 0 and 1 is a consequence of the "normalisation" or "standardisation". The mean and variance of a Normal Distribution are not 0 and 1. That's one way of distinguishing between Normal and Standard Normal. Thanks for pointing me in the direction of the Gaussian function, but I am very familiar with the gaussian and normal functions (they're the same).Lindy Louise (talk) 21:10, 10 December 2011 (UTC)

Error in Fisher Information

Calculating out by hand, the Fisher Information in the top right box seems incorrect and should instead be Khosra (talk) 21:33, 9 September 2010 (UTC)

- I suggest you redo your calculations. Note that the “Estimation of parameters” section gives that , and — under the efficient estimation, the variance matrix of the parameter must be equal to the inverse of the Fisher information matrix. // stpasha » 08:06, 11 September 2010 (UTC)

About the lead

There used to be the time when the article started with “In probability theory, normal distribution is a continuous probability distribution which is often used to describe, at least approximately, any variable that tends to cluster around the mean”. Some people tend to revert the intro back to this sentence from time to time, which is why I think an explanation is due why such sentence is inappropriate in an encyclopedia.

First it must be stated that the distribution is not merely continuous, but absolutely continuous. Absolute continuity implies that the distribution possesses density, whereas simple continuity means very little. Second, about the “any variable that tends to cluster around the mean”. This is not an informative statement. Any unimodal distribution can be said to “cluster around the mean”, and some non-unimodal distributions too. This statement is so loose that it fails to describe anything. Finally, “is often used to describe, at least approximately” is a weasel-phrase. No serious researcher will use normal distribution to describe his data, unless he has good reasons to believe that the data IS actually normally distributed. There is a good quote from Fisher about this, see the Occurrence section. // stpasha » 09:23, 2 October 2010 (UTC)

- When I put that statement there, I had no idea that it had a history of being there. It's just that the former phrase, which I see someone has reverted, is just utterly, absolutely horrible:

- In probability theory and statistics, the normal distribution, or Gaussian distribution, is an absolutely continuous probability distribution whose cumulants of all orders above two are zero.

- Keep in mind, Stpasha, that this sentence may sound fine to you, an expert in statistics, but to the average reader, it simply makes no sense. It is characteristic of a nasty trend in so many technical articles on Wikipedia, which is that they are written by experts for experts. The number of experts in any field is miniscule compared to the number of non-experts, and in any case, an expert in statistics is not likely to go reading the Wikipedia article on the normal distribution to figure out what it is. Imagine if you are an average non-expert, who might conceivably have some idea of what a probability distribution is, but maybe not, and certainly not much more -- reading this sentence you're going to think "What the hell? What does 'absolutely continuous' mean? What are 'cumulants'?" If you read the link to absolutely continuous, it makes no sense to a non-expert. Likewise for cumulants. The lead sentence is an introduction that is supposed to tell the average non-expert what a topic is about. My old lead sentence read essentially “In probability theory, the normal distribution is a continuous probability distribution which is often used to describe, at least approximately, any real-valued random variable that tends to cluster around the mean”. (Note, I added "real valued" and "random".) It tells you

- This is a continuous distribution, used to describe a real number (as opposed to a discrete distribution, a multi-variate distribution, etc.).

- This is a very common distribution, often used as a first approximation in statistics to describe any single-peaked distribution (as opposed e.g. to a multi-peaked distribution).

- Both of these facts may seem so obvious to you as not to even merit mentioning, but they are exactly what a non-expert doesn't know but needs to know. I am not opposed to other formulations of these two facts, but any lead must mention these basic facts. If you disagree with the second fact as I've stated it, figure out some other way to express it that satisfies you, but don't take it out. As for your comment about "no serious researcher ...":

- Beware of the "no true Scotsman" fallacy.

- It doesn't address the essential point, which is "as a first approximation". Plenty of statistical techniques use the normal distribution as an approximation. MCMC often uses a Gaussian as a proposal distribution. Laplace approximation approximates a posterior distribution with a Gaussian centered around the mode. Etc.

- Cumulants and absolute continuity are both advanced topics that are irrelevant to the vast majority of users and hence simply do not belong in the lead. (Note that your average college-level intro statistics course doesn't even mention either of these topics.) As for your comment about "continuous" being meaningless, I respectfully must disagree -- in common (non-expert) statistical parlance, "continuous" is the opposite of "discrete" and means that a distribution is defined by a density function as opposed to a probability mass function. This means quite a lot. If you want to state that the distribution is absolutely continuous, or has no non-zero cumulants except the first two, or any other statement that pleases experts but has no meaning to non-experts, fine -- but not in the lead. Benwing (talk) 22:21, 2 October 2010 (UTC)

BTW, Stpasha, you might want to check out the pages WP:TECHNICAL and Wikipedia:Lead section#Introductory text, which provide guidelines on how technical articles, and particularly the lead sections, should be written. Benwing (talk) 23:01, 2 October 2010 (UTC)

- I agree strongly with Benwing. This is one of the top hits in stats and the lead says, "go away--we don't want you, this article is just for mathematicians." I really can't make any sense of stpasha's comments about continuity vs absolute continuity. Remember, when you write something, it is to communicate something to someone else. Do you seriously believe that there exists a person who (a) understands the distinction, and (b) doesn't know that the normal is absolutely continuous? Obviously, this fact belongs way, way down in the article. 018 (talk) 00:12, 3 October 2010 (UTC)

- I do understand that the cumulants are hard to digest, but it is the only possible way to actually define the distribution without using formulas. In most textbooks they would simply state that a normal distribution is the one with the following pdf:, and provide a formula. When you say that normal distribution “is the distribution that is often used to describe ...”, then this sentence is merely a description, not a definition. It’s like if you were writing an article about tomatoes, you’d start it with “Tomatoes are fruits that are red in color.” There are some guidelines about what the first sentence should look like, see WP:LEAD#First sentence, in particular the “If the subject is amenable to definition ...” part.

- I agree that an average reader will probably not understand what these cumulants are about. But at least the reader will know that there is something here that he doesn't understand. If you write the first sentence the way you do, then the reader will simply learn that normal distribution is the one which everybody uses, and he won’t be any wiser as to what it actually is. Moreover, he probably won’t understand the fact that he still doesn’t know what the distribution is.

- As for the absolute continuity — current Wikipedia articles don’t do a good job in explaining what that is. And in fact it might be beneficial to adopt the other terminology convention and to rename absolute continuity into simple continuity, as many probability theory textbooks do. Note also that there are in fact 3 “pure” types of random variables: continuous, discrete, and singular. The last one nobody talks about because they are impractical and very inconvenient to analyze.

- Lastly, about the “first approximation”. The normal distribution is indeed used as an approximation. Especially in the college-level textbooks and examples. The reason for this is that the normal distribution is to a certain extent the “simplest” statistical distribution. And incidentally, what makes it “simple” is the fact that it has only two nonzero cumulants. Now, this reasoning goes way deeper than any regular textbook, but it is so. And it must also be mentioned that there is no “next step” approximation -- that is, there are no distributions with only 3 nonzero cumulants, or only 4, etc.

- As for the true Scotsmen — it is a common knowledge that you really don’t want to impose such assumption in your research, unless there is just no way around it. The times have past when simple approximations where sufficient in research, now they are left only in exercises and problem sets. Note that MCMC method doesn’t assume anything — it merely uses normal as the transition density, which is done for convenience, as the result of the method does not depend on this choice. The Laplacian estimator uses the fact that certain objectives allow for quadratic expansion around the point of maximum, which translates into local asymptotic normality and normal approximation. There are some objectives (e.g. maximum score estimator) which do not allow such quadratic expansion, for those estimators the distribution of the Laplacian estimator will be drastically different (and more complicated). // stpasha » 00:56, 3 October 2010 (UTC)

- stpasha, The first sentence of the guide you linked to reads, "The article should begin with a declarative sentence telling the nonspecialist reader what (or who) is the subject." mentioning cumulants totally fails this requirement. You are also confusing a definition with what a mathematician calls a definition. I'd also point out that you are thinking of this from a very narrow part of a narrow part of the world (people with Ph.D.s in mathematics). This article is intended for a much broader audience. 018 (talk) 03:28, 3 October 2010 (UTC)

- Well, the sentence with cumulants might be failing on the “nonspecialist” part, but the current sentence is failing on the “what the subject is” part, which is more serious. I’d be happy to have a nontechnical lead, but we cannot think of one. I did not understand your remark about the definition — do you think that current first sentence actually defines something? // stpasha » 05:41, 3 October 2010 (UTC)

- Stpasha, the problem here I think is that you're misinterpreting what the guideline says. What it says exactly is "tell what the subject is"; it doesn't say "define the subject precisely and in a way that uniquely characterizes the subject". These are two entirely different things. In fact, very few statistics articles include a rigorous definition of their subject in the lead. As an example, the Student's t distribution says

- In probability and statistics, Student's t-distribution (or simply the t-distribution) is a continuous probability distribution that arises in the problem of estimating the mean of a normally distributed population when the sample size is small.

- IMO this is a well-written lead and I think most of the highly experienced WP editors would agree. This lead does not define precisely what the distribution is in a mathematical sense, but instead defines it pragmatically by describing (1) the basic properties, and (2) one of its most common uses. Note also that the guideline specifically says "[tell] the nonspecialist reader". Everything in the intro needs to be geared to the nonspecialist. This principle is emphasized over and over in all the guidelines and is by far the most important principle to stick to. In addition, as for your comment about readers not ever learning what the normal distribution "is", this doesn't make any sense to me. Note that the p.d.f. formula is given a sentence or two down. Furthermore, a definition specified in terms of cumulants is not going to help a reader who doesn't know what a cumulant is, and even if they manage to remember the cumulant-based definition, it can't reasonably be said that they "know" what the definition is. As for your comment about cumulants being "the only way to define the distribution without formulas": First, I don't see the point of this. If avoiding formulas makes the definition harder to understand than using them, by all means use them. Second of all, I don't even think this statement about cumulants is true, as you can also define the normal distribution through maximum entropy, through the central limit theorem, etc. Benwing (talk) 07:43, 3 October 2010 (UTC)

- Stpasha, the problem here I think is that you're misinterpreting what the guideline says. What it says exactly is "tell what the subject is"; it doesn't say "define the subject precisely and in a way that uniquely characterizes the subject". These are two entirely different things. In fact, very few statistics articles include a rigorous definition of their subject in the lead. As an example, the Student's t distribution says

- Well, the sentence with cumulants might be failing on the “nonspecialist” part, but the current sentence is failing on the “what the subject is” part, which is more serious. I’d be happy to have a nontechnical lead, but we cannot think of one. I did not understand your remark about the definition — do you think that current first sentence actually defines something? // stpasha » 05:41, 3 October 2010 (UTC)

- stpasha, The first sentence of the guide you linked to reads, "The article should begin with a declarative sentence telling the nonspecialist reader what (or who) is the subject." mentioning cumulants totally fails this requirement. You are also confusing a definition with what a mathematician calls a definition. I'd also point out that you are thinking of this from a very narrow part of a narrow part of the world (people with Ph.D.s in mathematics). This article is intended for a much broader audience. 018 (talk) 03:28, 3 October 2010 (UTC)

History

Please see Anders Hald : A History of Parametric Statistical Inference from Bernoulli to Fisher, 1713-1935. He has different views than Stigler.

1774 : asymptotic normality of the posterior distribution, derivation of the constant of the normal distribution (page 38). This is the first justification of the normal distribution and first appearance of the Bayesian Central Limit Theorem

1785 : further results (page 44)

- And what makes you think that Hald is always right or clear? His book gives quite wrong impression regarding the accomplishments of Laplace. Laplace (1774) JSTOR 2245476 considered the posterior distribution in a simple binomial experiment. However he did not show asymptotic normality of the posterior, merely that its expected value converges to the “truth” with probability one. He estimated the probability of this difference being different from the probability limit, but his final formula (on p.369) is nowhere close to resembling the normal distribution. He did derive the value of the integral ∫dμ/√(lnμ), which after a change of variables becomes the Gaussian integral, however this article already mentions the fact that the integral was first computed by Laplace; and Gauss mentioned that too in his tract.

- I did not check the Laplace (1785) memoire, since I cannot find it in English translation. But it is highly unlikely he actually derived the normal distribution there. Also, Hald himself states the following: “The second revolution began in 1809-1810 with the solution of the problem of the mean, which gave us two of the most important tools in statistics, the normal distribution as a distribution of observations, and the normal distribution as an approximation to the distribution of the mean in large samples.” (p. 3). From this I conclude that the normal distribution was not actually known before 1809 as the distribution per se. // stpasha » 07:06, 4 October 2010 (UTC)

- Hello stpasha. I am not so knowledgeable but here is my point of view. 1) The article does not mention that Gauss has read Laplace (1774). Bayes (1763) was not known to the mathematicians including Laplace until the 1780's. So Gauss learned the Bayesian paradigm from Laplace (1774).

- 2) Stigler in the introduction to his translation of Laplace (1774) actually mentions the asymptotic normality of the posterior.

- 3) Laplace proved like De Moivre the validity of an approximation in large samples. This approximation is made general in Laplace (1786) and Laplace (1790a). I follow Hald's bibliography. Laplace (1790a) is a general version of the Bayesian Central Limit Theorem. Until Laplace (1790a), Laplace had not read Gauss's book. In Laplace (1786) and Laplace (1790a), the expression of the normal density is explicit. Laplace knows he approximates one distribution by another one (the integral value is 1).

- 4) By doing so, he only proves the asymptotic normality of the posterior. So the normal distribution is not yet a distribution of the errors like the Laplace distribution (1774). This is Gauss's work. However Laplace had already shown the importance of the normal distribution, as the asymptotic posterior. This is the first point of the quotation of Hald.

- 5) The normal distribution was not yet the distribution of the mean in large samples. I agree. This is later work by Laplace when he switched to the frequentist paradigm. This is the second point of the quotation of Hald.

- — Preceding unsigned comment added by 193.171.33.67 (talk) 22:16, 5 October 2010 (UTC)

- Hello 193.171.33.67. I’m not a history expert myself, but when researching this subject I came to realize few things: (1) it is best not to rely on either Stigler’s or Hald’s opinions unless you can verify their claims by looking at the original papers, (2) history is subject to interpretation: two people using the same facts may come to different conclusions, (3) sometimes it is hard to tell whether an author understood the results of his work the same way as we understand them now (4) a publication must be judged not only by what it says, but also on what actual impact it had (poor Adrain, I feel sorry for him).

- That said, we have sources for the following publications: De Moivre (1733), Laplace (1774), and Gauss (1809). I cannot find the source for either Laplace (1786) or Laplace (1790), so there is no point in speculating what’s in there.

- Now, for Gauss the relevant sections are 175−178. He says (p.254): “the probability to be assigned to each error Δ will be expressed by a function of Δ which we shall denote by φΔ … the probability that an error lies between the limits Δ and Δ+dΔ differing from each other by the infinitely small difference dΔ, will be expressed by φΔdΔ; hence the probability generally, that the error lies between D and D′, will be given by the integral ∫φΔdΔ extended from Δ=D to Δ=D′.” Thus, in his notation φ is explicitly and unambiguously a probability density function.

- After some manipulations, Gauss concludes (p.258−259) that: “… and since, by the elegant theorem first discovered by Laplace, the integral ∫e−hhΔΔdΔ from Δ=−∞ to Δ=+∞ is √π⁄h, (denoting by π the semicircumference of the circle the radius of which is unity), our function becomes

- ”

- As you see, Gauss does cite the Laplace when it comes to the integral (which nowadays is unfairly called the Gaussian integral). However we also see that Gauss does not cite anybody regarding his function φ.

- And although this function φ was known earlier to de Moivre and Laplace, under different disguises, Gauss was the first to actually interpret it as a probability density function, as a random variable. And it is from his work that this distribution became widely known in the scientific community, and why it was called the Gaussian; whereas de Moivre’s “pamphlet for a private circulation” doesn't count. // stpasha » 23:31, 9 October 2010 (UTC)

US adult males: revisited

Can we have a less controversial example in the lead? Current one (the heights of US adult males) isn't supported by a reference, and also contradicts a later claim in the article that the sizes of biological species are distributed approximately log-normally. Besides, “US adult males” is not a sufficiently homogeneous group: variability due to race / ethnicity make it a mixture of several log-normal distributions. // stpasha » 05:33, 5 March 2010 (UTC)

- In addition to that, the phrase also presents a broken thought: "For example, the heights of adult males in the United States are roughly normally distributed, with a mean of about 70 in (1.8 m)" In what? And 70 what? Bananas? —Preceding unsigned comment added by 201.95.183.186 (talk) 00:24, 7 March 2010 (UTC)

- Those are inches. US adult males are just weird that way :) // stpasha »

Long ago I suggested to remove this paragraph from the lead, which suggestion was refuted on the basis that it is “the only generally understandable information” there. Now that the lead has been improved in readability, maybe it’s ok to get this piece finally out? // stpasha » 02:19, 9 October 2010 (UTC)

Zero variance

I think maybe we should alter the definition to allow normal distributions with 0 variance. This is needed for consistency with the “Multivariate normal distribution” article, where we say that a random vector X is distributed normally if and only if every linear combination of its components cX has univariate normal distribution. Since this linear combination can potentially have zero variance, such case must be allowed within the current article.

The cons for such inclusion are that we'll need to define pdf and cdf separately for the case σ² = 0. … stpasha » 20:43, 24 November 2009 (UTC)

- Yes I think this is the best option. Have two definitions for each of the PDF (as it is done now) and CDF (to do) functions with the explicit mention in the text (as it is) that they are generalised functions used to model the special case for sigma=0. This needs to be stated explicit because at the moment the article gives the impression that from the usual Gaussian pdf we can derive this particular behaviour (degenerate distribution with all the mass in mu) if we set sigma=0. —Preceding unsigned comment added by 130.236.58.84 (talk) 10:13, 10 October 2010 (UTC)

- I think this subject should reopen. You cannot unilaterally define special formulations for the cdf and pdf as you wish in order to take care for the zero variance case. I am against such formulations that have nothing to do with the original CDF and the integral. Similar simplifications can be made for many other distributions. — Preceding unsigned comment added by 130.236.58.84 (talk) 08:54, 9 October 2010