User:Avazirani/sandbox


In machine learning, a common task is the study and construction of algorithms that can learn from and make predictions on data.[1] Such algorithms make data-driven predictions or decisions[2]: 2 by building a mathematical model from input data.

The data used to build the final model usually comes from multiple datasets. In particular, three datasets are commonly used at different stages of the creation of the model.

The model is initially fit on a training dataset,[3] that is, a set of examples used to fit the parameters (e.g. weights of connections between neurons in artificial neural networks) of the model.[4] The model (e.g. a neural net or a naive Bayes classifier) is trained on the training dataset using a supervised learning method (e.g. gradient descent or stochastic gradient descent). In practice, the training dataset often consists of pairs of an input vector and the corresponding answer, a response vector or scalar commonly called the target, which is what the model is attempting to predict. The current model is run on each input vector in the training dataset and produces a result (the model's prediction), which is then compared with the target. Based on the result of the comparison and the specific learning algorithm being used, the parameters of the model are adjusted. Model fitting can include both variable selection and parameter estimation.
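The fitting loop described above can be sketched in a few lines. This is a minimal illustration only, assuming a one-parameter-pair linear model and batch gradient descent on mean squared error; the function name `fit_linear`, the learning rate, the epoch count, and the toy data are all hypothetical choices, not taken from the cited sources.

```python
def fit_linear(inputs, targets, learning_rate=0.05, epochs=2000):
    """Fit y = w * x + b by batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0  # model parameters, adjusted during training
    n = len(inputs)
    for _ in range(epochs):
        # Run the current model on every training input.
        predictions = [w * x + b for x in inputs]
        # Compare each prediction with its target.
        errors = [p - t for p, t in zip(predictions, targets)]
        # Gradient of the mean squared error with respect to w and b.
        grad_w = 2.0 * sum(e * x for e, x in zip(errors, inputs)) / n
        grad_b = 2.0 * sum(errors) / n
        # Adjust the parameters by a small step against the gradient.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Hypothetical training dataset: the targets follow y = 2x + 1 exactly.
train_inputs = [0.0, 1.0, 2.0, 3.0, 4.0]
train_targets = [1.0, 3.0, 5.0, 7.0, 9.0]
w, b = fit_linear(train_inputs, train_targets)
```

On this noise-free toy data the fitted parameters approach w = 2 and b = 1; with real data the learning rate and number of epochs would themselves need tuning.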

Subsequently, the fitted model is used to predict the responses for the observations in a second dataset called the validation dataset.[3] The validation dataset provides an unbiased evaluation of a model fit on the training dataset while tuning the model's hyperparameters[5] (e.g. the number of hidden units in a neural network[4]). Validation datasets can be used for regularization by early stopping: training is stopped when the error on the validation dataset increases, as this is a sign of overfitting to the training dataset.[6] This simple procedure is complicated in practice by the fact that the validation dataset's error may fluctuate during training, producing multiple local minima. This complication has led to the creation of many ad-hoc rules for deciding when overfitting has truly begun.[6]
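One simple ad-hoc rule of this kind is a "patience" criterion: stop only after the validation error has failed to improve for a fixed number of consecutive epochs. The sketch below assumes this rule purely for illustration (it is not claimed to be one of the criteria in the cited reference), and the function name `early_stopping_epoch` and the error values are hypothetical.

```python
def early_stopping_epoch(validation_errors, patience=3):
    """Return (best_epoch, stop_epoch) for per-epoch validation errors.

    Training stops once the validation error has failed to improve on its
    best value for `patience` consecutive epochs.
    """
    best_error = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0
    for epoch, error in enumerate(validation_errors):
        if error < best_error:
            # New minimum: remember it and reset the counter.
            best_error, best_epoch = error, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return best_epoch, epoch
    # The error sequence ended before the stopping rule fired.
    return best_epoch, len(validation_errors) - 1


# Hypothetical validation errors with a temporary bump at epoch 3.
errors = [0.90, 0.70, 0.60, 0.62, 0.55, 0.50, 0.52, 0.54, 0.57, 0.60]
best, stopped = early_stopping_epoch(errors, patience=3)
```

With a patience of 1, the same sequence would stop at the bump in epoch 3 and keep the model from epoch 2, missing the lower error reached later; a larger patience tolerates such local minima at the cost of extra training.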

Finally, the test dataset is a dataset used to provide an unbiased evaluation of a final model fit on the training dataset.[5]

Training dataset

A training dataset is a dataset of observations used for learning, that is, to fit the parameters (e.g., weights) of a model, for example a classifier, that predicts an outcome or response.[7][8]

Most approaches that search through training data for empirical relationships tend to overfit the data, meaning that they can identify apparent relationships in the training data that do not hold in general.

Validation dataset

A validation dataset is a set of examples used to tune the hyperparameters (i.e. the architecture) of a classifier. In artificial neural networks, for example, the number of hidden units is a hyperparameter.[7][8] The validation dataset, like the test dataset, should follow the same probability distribution as the training dataset.

To avoid overfitting, whenever any classification parameter needs to be adjusted, it is necessary to have a validation dataset in addition to the training and test datasets. For example, if the most suitable classifier for the problem is sought, the training dataset is used to train the candidate algorithms, the validation dataset is used to compare their performance and decide which one to take and, finally, the test dataset is used to obtain performance characteristics such as accuracy, sensitivity, specificity, and F-measure.[citation needed] The validation dataset functions as a hybrid: it is training data used for testing, but neither as part of the low-level training nor as part of the final testing.[citation needed]
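The performance characteristics named above can be computed from the counts of true and false positives and negatives. The following sketch assumes a binary problem with labels 1 (positive) and 0 (negative), takes the F-measure to be the F1 score (the harmonic mean of precision and sensitivity), and assumes both classes occur and at least one positive prediction is made so that no denominator is zero. The function name and the label lists are hypothetical.

```python
def classification_metrics(true_labels, predicted_labels):
    """Compute accuracy, sensitivity, specificity and F1 for 0/1 labels."""
    pairs = list(zip(true_labels, predicted_labels))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    sensitivity = tp / (tp + fn)  # also called recall
    precision = tp / (tp + fp)
    return {
        "accuracy": (tp + tn) / len(pairs),
        "sensitivity": sensitivity,
        "specificity": tn / (tn + fp),
        "f1": 2 * precision * sensitivity / (precision + sensitivity),
    }


# Hypothetical test-set labels and the predictions of a selected classifier.
test_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
test_pred = [1, 1, 1, 0, 0, 0, 0, 0, 1, 1]
metrics = classification_metrics(test_true, test_pred)
```

Here there are 3 true positives, 1 false negative, 4 true negatives and 2 false positives, giving an accuracy of 0.7, a sensitivity of 0.75, and a specificity and F1 score of about 0.67 each.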

The basic process of using a validation dataset for model selection (as part of training dataset, validation dataset, and test dataset) is:[8][9]

Since our goal is to find the network having the best performance on new data, the simplest approach to the comparison of different networks is to evaluate the error function using data which is independent of that used for training. Various networks are trained by minimization of an appropriate error function defined with respect to a training data set. The performance of the networks is then compared by evaluating the error function using an independent validation set, and the network having the smallest error with respect to the validation set is selected. This approach is called the hold out method. Since this procedure can itself lead to some overfitting to the validation set, the performance of the selected network should be confirmed by measuring its performance on a third independent set of data called a test set.
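The selection procedure just described can be sketched as follows. This is an illustrative outline only: the two candidate models (a constant predictor and a line through the origin), the helper names, and the noise-free toy data are hypothetical stand-ins for the trained networks in the passage.

```python
def mean_squared_error(predict, dataset):
    """Average squared difference between predictions and targets."""
    return sum((predict(x) - y) ** 2 for x, y in dataset) / len(dataset)


def fit_constant(train):
    """Candidate 1: always predict the mean training target."""
    mean_y = sum(y for _, y in train) / len(train)
    return lambda x: mean_y


def fit_proportional(train):
    """Candidate 2: least-squares fit of y = a * x."""
    a = sum(x * y for x, y in train) / sum(x * x for x, _ in train)
    return lambda x: a * x


def select_model(candidates, train, validation):
    """Fit every candidate on `train`; pick the lowest validation error."""
    fitted = {name: fit(train) for name, fit in candidates.items()}
    val_errors = {name: mean_squared_error(model, validation)
                  for name, model in fitted.items()}
    best_name = min(val_errors, key=val_errors.get)
    return best_name, fitted[best_name], val_errors


# Hypothetical data following y = 3x, split into three disjoint sets.
train = [(1.0, 3.0), (4.0, 12.0), (6.0, 18.0)]
validation = [(2.0, 6.0), (5.0, 15.0)]
test = [(3.0, 9.0)]

candidates = {"constant": fit_constant, "proportional": fit_proportional}
best_name, best_model, val_errors = select_model(candidates, train, validation)
# The test set is touched only once, to confirm the selected model.
test_error = mean_squared_error(best_model, test)
```

The test error is computed only for the selected model and plays no part in the selection, which is what keeps it an independent check.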

An application of this process is in early stopping, where the candidate models are successive iterations of the same network, and training stops when the error on the validation set grows, choosing the previous model (the one with minimum error).

Selection of a validation dataset

Holdout method

Most simply, part of the training dataset can be set aside and used as a validation set: this is known as the holdout method[citation needed]. Common proportions are 70%/30% training/validation[citation needed].
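A holdout split of this kind might look like the sketch below. The function name `holdout_split`, the fixed seed, and the choice to shuffle before splitting are assumptions made for illustration; the 30% default simply mirrors the proportion mentioned above.

```python
import random


def holdout_split(examples, validation_fraction=0.3, seed=0):
    """Shuffle the examples and set aside a fraction as the validation set."""
    shuffled = list(examples)
    # A seeded generator makes the split reproducible.
    random.Random(seed).shuffle(shuffled)
    n_validation = round(len(shuffled) * validation_fraction)
    validation = shuffled[:n_validation]
    training = shuffled[n_validation:]
    return training, validation


# Ten hypothetical observations, identified here by their indices.
training, validation = holdout_split(range(10))
```

Shuffling first avoids a validation set that merely reflects the order in which the data happened to be stored.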

Cross-validation

Alternatively, the holdout process can be repeated, each time partitioning the original training dataset into a training dataset and a validation dataset: this is known as cross-validation. The repeated partitions can be made in various ways, such as dividing the data into two equal datasets and using them as training/validation and then validation/training, or repeatedly selecting a random subset as the validation dataset.[citation needed] Partitioning the data into k subsets, each of which is used once as the validation set while the remaining k − 1 subsets form the training set, is commonly referred to as k-fold cross-validation, where the user defines k, the number of subsets.
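As a rough sketch of k-fold cross-validation, the generator below yields index lists for each fold, with every observation appearing in exactly one validation set. The name `k_fold_splits` is hypothetical, and for brevity the folds are taken as contiguous blocks without shuffling, which in practice would usually be preceded by a random permutation of the data.

```python
def k_fold_splits(n_observations, k):
    """Yield (training_indices, validation_indices) for each of k folds."""
    indices = list(range(n_observations))
    # Fold sizes differ by at most one when n is not divisible by k.
    fold_sizes = [n_observations // k + (1 if i < n_observations % k else 0)
                  for i in range(k)]
    start = 0
    for size in fold_sizes:
        validation = indices[start:start + size]
        training = indices[:start] + indices[start + size:]
        yield training, validation
        start += size


# Ten observations split into k = 5 folds of two observations each.
splits = list(k_fold_splits(10, 5))
```

A model would be trained and evaluated once per yielded pair, and the k validation errors are typically averaged.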

Another method is leave-p-out cross-validation. In this method, p observations are held out as the validation set and the remaining n − p observations are used as the training set, and this is repeated for every possible way of choosing p observations from the n available. Like the parameter k, p is defined by the user. A common special case is leave-one-out cross-validation (LOOCV), in which p = 1 and the number of folds equals the number of observations, n. The method is therefore performed n times, each time training the model on all but one observation and predicting the outcome of the single left-out observation. For example, for a dataset of 100 observations on which a classifier is to be built, leave-one-out cross-validation would mean training the model 100 times, each time on 99 observations, leaving a different observation out and predicting its outcome each time.
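The sketch below enumerates leave-p-out splits under the usual definition, in which every subset of p observations serves once as the validation set, so that there are "n choose p" splits in total. The function name `leave_p_out_splits` is hypothetical. Setting p = 1 reproduces the 100-observation leave-one-out example.

```python
from itertools import combinations


def leave_p_out_splits(n_observations, p):
    """Yield (training_indices, validation_indices) for every p-subset."""
    all_indices = range(n_observations)
    for held_out in combinations(all_indices, p):
        held_out_set = set(held_out)
        training = [i for i in all_indices if i not in held_out_set]
        yield training, list(held_out)


# Leave-one-out on 100 observations: 100 splits of 99 training points each.
loocv_splits = list(leave_p_out_splits(100, 1))

# Leave-two-out on 5 observations: one split per pair of observations.
lpo_splits = list(leave_p_out_splits(5, 2))
```

Because the number of splits grows combinatorially with p, exhaustive leave-p-out is generally practical only for small p or small datasets.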

Test dataset

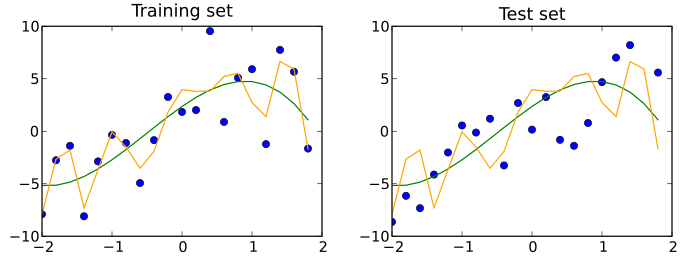

A test dataset is a dataset that is independent of the training dataset but that, like the validation dataset, follows the same probability distribution as the training dataset. If a model fit to the training dataset also fits the test dataset well, minimal overfitting has taken place (see figure). A better fit on the training dataset than on the test dataset usually points to overfitting.

A test set is therefore a set of examples used only to assess the performance (i.e. generalization) of a fully specified classifier.[7][8]
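The comparison between training and test fit can be illustrated with a model that memorizes its training data. The sketch below assumes a 1-nearest-neighbour predictor as a deliberately overfitting model; the helper names and the small noisy dataset are hypothetical.

```python
def nearest_neighbor_predictor(train):
    """Return a 1-nearest-neighbour predictor that memorizes `train`."""
    def predict(x):
        # Return the target of the training input closest to x.
        _, nearest_y = min(train, key=lambda pair: abs(pair[0] - x))
        return nearest_y
    return predict


def mean_squared_error(predict, dataset):
    """Average squared difference between predictions and targets."""
    return sum((predict(x) - y) ** 2 for x, y in dataset) / len(dataset)


# Hypothetical noisy training data around y = x, and noise-free test data.
train = [(0.0, 0.1), (1.0, 0.9), (2.0, 2.3), (3.0, 2.8)]
test = [(0.4, 0.4), (1.6, 1.6), (2.4, 2.4)]

model = nearest_neighbor_predictor(train)
train_error = mean_squared_error(model, train)
test_error = mean_squared_error(model, test)
generalization_gap = test_error - train_error
```

The training error is exactly zero because every training point is its own nearest neighbour, while the test error is clearly positive; a gap of this kind is the signature of overfitting described above.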

References

- Ron Kohavi; Foster Provost (1998). "Glossary of terms". Machine Learning. 30: 271–274. doi:10.1023/A:1007411609915. S2CID 36227423.

- Machine learning and pattern recognition "can be viewed as two facets of the same field."

- James, Gareth (2013). An Introduction to Statistical Learning: with Applications in R. Springer. p. 176. ISBN 978-1461471370.

- Ripley, Brian (1996). Pattern Recognition and Neural Networks. Cambridge University Press. p. 354. ISBN 978-0521717700.

- Brownlee, Jason. "What is the Difference Between Test and Validation Datasets?". Retrieved 12 October 2017.

- Prechelt, Lutz; Geneviève B. Orr (2012-01-01). "Early Stopping — But When?". In Grégoire Montavon; Klaus-Robert Müller (eds.). Neural Networks: Tricks of the Trade. Lecture Notes in Computer Science. Springer Berlin Heidelberg. pp. 53–67. doi:10.1007/978-3-642-35289-8_5. ISBN 978-3-642-35289-8. Retrieved 2013-12-15.

- Ripley, B.D. (1996) Pattern Recognition and Neural Networks, Cambridge: Cambridge University Press, p. 354

- "Subject: What are the population, sample, training set, design set, validation set, and test set?", Neural Network FAQ, part 1 of 7: Introduction (txt), comp.ai.neural-nets, Sarle, W.S., ed. (1997, last modified 2002-05-17)

- Bishop, C.M. (1995), Neural Networks for Pattern Recognition, Oxford: Oxford University Press, p. 372

External links

- FAQ: What are the population, sample, training set, design set, validation set, and test set?

- What is the Difference Between Test and Validation Datasets?

- What is training, validation, and testing data-sets scenario in machine learning?

- Is there a rule-of-thumb for how to divide a dataset into training and validation sets?